Have you ever spoken a command to your phone and just marveled at the result? You ask it to set a timer while your hands are covered in flour, or to navigate you through an unfamiliar city, and it just… works. It feels like a little bit of magic in your pocket. But what’s really happening when you say, “Hey Google, what’s the weather today?” How does a machine translate your spoken words, with all their nuances and accents, into a precise action? It’s not magic; it’s a fascinating and complex dance of artificial intelligence working in perfect harmony.

My name is Zain Mhd, and for the better part of five years, I’ve been diving deep into the world of AI. My work involves breaking down these complex technologies to see how they truly function and impact our daily lives. My fascination comes from a place of pure curiosity—a desire to understand the logic behind the systems we now take for granted. This journey has been less about formal credentials and more about hands-on exploration, driven by a passion for sharing what I’ve learned in a way that everyone can understand.

Let’s pull back the curtain on the smart assistant in your pocket. We’ll break down the entire process, step-by-step, from the moment you speak a command to the second your phone answers back. You’ll see that behind that simple voice interaction is a powerful AI system designed to listen, understand, and act.

The First Step: Hearing the Wake Word

Before your assistant can do anything, it has to know you’re talking to it. This is where the “wake word” comes in—phrases like “Hey Siri,” “Alexa,” or “Okay Google.” You might think your phone is constantly recording everything you say, but that’s not quite how it works. It would drain your battery in no time and create huge privacy issues.

Instead, your phone uses a highly specialized, low-power processor that is always on. Its only job is to listen for one specific sound pattern: the wake word. Think of it like a guard dog that’s trained to sleep through most noises but perks up its ears the moment it hears its name. This processor doesn’t understand language; it just recognizes the specific acoustic pattern of the trigger phrase.

Here’s the thing: only after it detects that wake word does the device “wake up” its main processors and start actively listening to and recording your command for further analysis. This is a clever way to conserve power and respect user privacy.

Key Points about Wake Word Detection:

- Always On, Low Power: It uses a tiny amount of energy, so it doesn’t kill your battery.

- Pattern Recognition, Not Understanding: The chip isn’t listening to your conversations. It’s just waiting for a very specific soundwave pattern.

- The “False Alarm”: This is why sometimes a word on TV or in a conversation that sounds like the wake word can accidentally trigger your assistant. The pattern was just close enough to trick the system.
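To make the "guard dog" idea concrete, here is a toy sketch of the gating logic. It assumes each slice of audio has already been turned into a short list of numbers (a hypothetical feature vector) and uses cosine similarity against a stored template as a stand-in for the detector. Real wake-word detectors usually run a small neural network instead, and the template, threshold, and function names below are invented for illustration.

```python
import math

# Hypothetical "fingerprint" of the wake word as a short feature vector.
# Real detectors typically use a small neural network; this is a toy stand-in.
WAKE_TEMPLATE = [0.9, 0.1, 0.8, 0.3]
THRESHOLD = 0.95  # higher = fewer false alarms, but more missed wake words

def cosine_similarity(a, b):
    """How closely two feature vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def is_wake_word(frame_features):
    """Cheap pattern check run on every frame by the always-on, low-power detector."""
    return cosine_similarity(frame_features, WAKE_TEMPLATE) >= THRESHOLD

def listen(frames):
    """Ignore frames until the wake word matches, then hand the rest to the main processor."""
    for i, frame in enumerate(frames):
        if is_wake_word(frame):
            return frames[i + 1:]  # only audio after the wake word is kept for analysis
    return []  # wake word never heard: nothing is kept
```

The detector never interprets the frames it discards; it only compares shapes. A near-match such as `[0.85, 0.15, 0.8, 0.3]` also clears the threshold, which is exactly the kind of "false alarm" described above.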

From Sound to Text: The Speech-to-Text Conversion

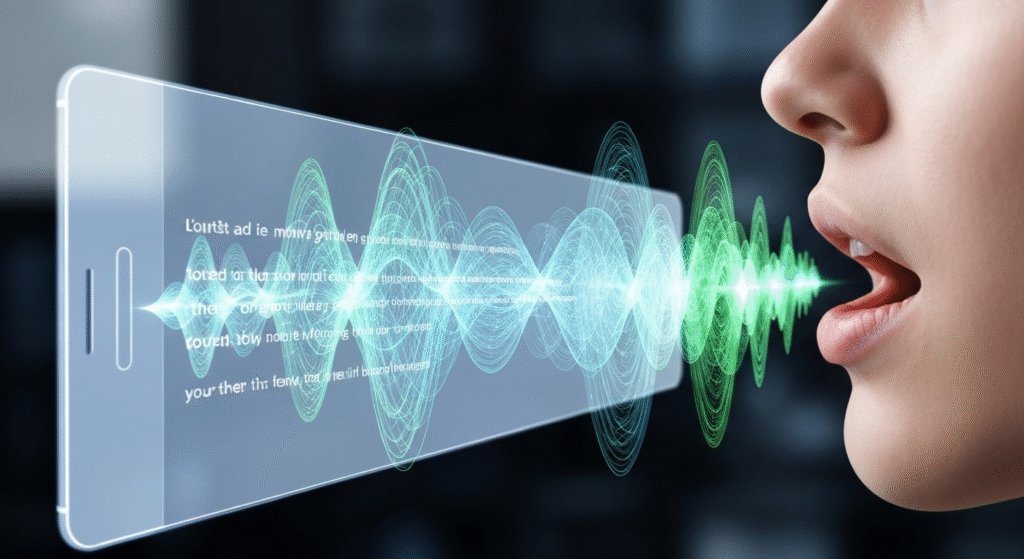

Once your phone is awake and listening, the next challenge is to convert your spoken words into digital text. This process is called Speech-to-Text (STT) or Automatic Speech Recognition (ASR). This is where the heavy lifting begins, and it’s a step that has improved dramatically over the past decade.

When you speak, you create soundwaves. The phone’s microphone captures these waves, and the AI slices the audio into short frames, each lasting a few hundredths of a second, then estimates which phonemes they contain. Phonemes are the smallest units of sound in a language (like the ‘k’ sound in “cat”). To make these estimates, the AI uses complex machine learning models that have been trained on millions of hours of spoken language.

It compares the sequence of sounds from your voice to its vast library of words and sentences. It then calculates the most probable sequence of words that could have produced those sounds. This is why a good internet connection helps; your phone often sends this audio clip to a powerful server in the cloud for a more accurate and faster analysis than your device could handle on its own.
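The idea of picking "the most probable sequence of words" can be sketched by scoring each candidate transcription twice: once for how well it matches the sounds (an acoustic score) and once for how plausible it is as language (a language-model score). The probabilities below are invented for illustration. Real recognizers search a vast space of candidates rather than two, and many modern systems fold both scores into a single neural network.

```python
import math

# Hypothetical acoustic scores: how well each candidate matches the recorded sounds
ACOUSTIC_LOGPROB = {
    "recognize speech": math.log(0.40),
    "wreck a nice beach": math.log(0.45),  # sounds slightly closer to the audio...
}

# Hypothetical bigram probabilities: how likely each word is to follow the previous one
BIGRAM = {
    ("<s>", "recognize"): 0.010, ("recognize", "speech"): 0.200,
    ("<s>", "wreck"): 0.001, ("wreck", "a"): 0.050,
    ("a", "nice"): 0.010, ("nice", "beach"): 0.020,
}
UNSEEN = 1e-6  # small fallback probability for word pairs never seen in training

def language_logprob(sentence):
    """Log-probability of a sentence under the toy bigram language model."""
    words = ["<s>"] + sentence.split()  # "<s>" marks the start of the sentence
    return sum(math.log(BIGRAM.get(pair, UNSEEN)) for pair in zip(words, words[1:]))

def best_transcription(candidates):
    """Pick the candidate with the highest combined acoustic + language score."""
    return max(candidates, key=lambda s: ACOUSTIC_LOGPROB[s] + language_logprob(s))
```

Even though "wreck a nice beach" fits the sounds slightly better here, `best_transcription(list(ACOUSTIC_LOGPROB))` picks "recognize speech" because it is far more plausible as a sentence.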

I remember working with early voice recognition software about five years ago, and it was a frustrating experience. You had to speak slowly and clearly, and even then, it would often get things wrong. Today, the accuracy is incredible: modern systems can keep up with fast speech, different accents, and even mumbling.

| Feature | Early Speech Recognition | Modern AI-Powered Speech Recognition |
| --- | --- | --- |
| Accuracy | Low to moderate. Often required users to “train” the software to their voice. | Very high. Can understand a wide range of accents and speech patterns without training. |
| Processing | Primarily done on the local device, making it slow and resource-intensive. | Primarily cloud-based, allowing for faster and more complex analysis. |
| Natural Language | Struggled with conversational speech, slang, and varied sentence structures. | Excels at understanding natural, conversational language and context. |
| Noise Handling | Poor. Background noise easily interfered with accuracy. | Advanced. Uses noise-cancellation algorithms to isolate the speaker’s voice. |

The Real Genius: Understanding Your Intent

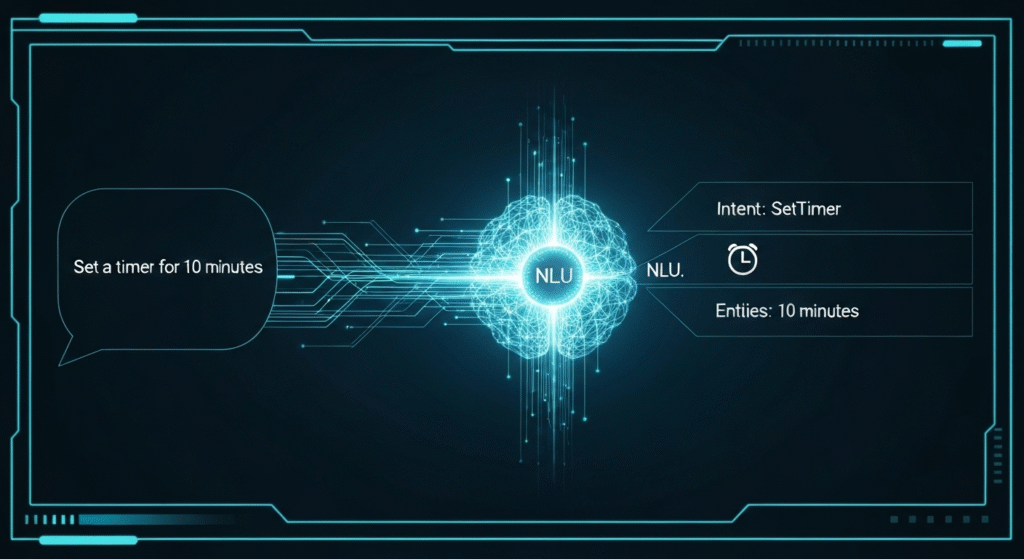

Converting your speech to text is a technical marvel, but it’s only half the battle. The text “set a ten-minute timer” is useless unless the AI can understand what you mean. This is the job of Natural Language Understanding (NLU), and it’s arguably the most critical part of the entire process. NLU is the “brain” that figures out your goal.

NLU breaks down your command into two key components: intent and entities.

Identifying Your Goal (Intent Recognition)

The intent is the core action you want the assistant to perform. The AI is trained to categorize your request into a specific command bucket.

- When you say, “What’s the capital of Japan?” the AI recognizes the intent as GetFact or AnswerQuestion.

- If you say, “Play some relaxing music,” the intent is PlayMusic.

- If you say, “Remind me to buy milk tomorrow,” the intent is CreateReminder.

The AI sifts through the sentence structure and keywords to make an educated guess about the primary goal of your command. This is why you can phrase the same request in many different ways. “Wake me up at 6 AM,” “Set an alarm for 6 AM,” and “I need to get up at 6 AM” all lead the AI to the same SetAlarm intent.
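A deliberately simple way to picture intent recognition is keyword scoring: count how many clues for each intent appear in the sentence and pick the intent with the most. Real assistants use trained classifiers rather than hand-written lists, so the keyword sets and the `recognize_intent` function below are hypothetical, but the sketch shows how different phrasings can land in the same bucket.

```python
import re

# Hypothetical keyword lists; real assistants learn these associations from data
INTENT_KEYWORDS = {
    "SetAlarm": {"alarm", "wake", "get up"},
    "PlayMusic": {"play", "music", "song"},
    "CreateReminder": {"remind", "reminder"},
    "GetFact": {"what", "how", "who", "capital", "tall"},
}

def recognize_intent(text):
    """Return the intent whose keywords best match the text, or 'Unknown'."""
    text = text.lower()
    words = set(re.findall(r"[a-z']+", text))

    def score(keywords):
        # Multi-word phrases are matched as substrings, single words as whole words
        return sum(1 for kw in keywords if ((kw in text) if " " in kw else (kw in words)))

    scores = {intent: score(kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "Unknown"
```

All three alarm phrasings from the paragraph above ("Wake me up at 6 AM", "Set an alarm for 6 AM", "I need to get up at 6 AM") come back as `SetAlarm`, while a request with no matching clues falls through to `Unknown`.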

Extracting the Key Details (Entity Extraction)

Once the intent is clear, the AI needs to pull out the specific pieces of information required to fulfill that intent. These are called entities. They are the crucial details that give the command context.

- In “Set an alarm for 7:30 AM,” the entity is 7:30 AM.

- In “Play some relaxing music,” the entity is relaxing music.

- In “What’s the weather like in Paris?” the entity is Paris.
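Entity extraction can be sketched with a few text patterns that pull out the details from the three examples above. This is only an illustration: production assistants use trained models that label each word in the sentence, and the patterns and the `extract_entities` function here are hypothetical (the place pattern, for example, simply assumes city names are capitalized).

```python
import re

# Hypothetical patterns; production systems use trained sequence-labeling models
TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s*(?:AM|PM))\b", re.IGNORECASE)
PLACE_PATTERN = re.compile(r"\bin ([A-Z][a-z]+(?: [A-Z][a-z]+)*)")  # assumes capitalized names
MUSIC_PATTERN = re.compile(r"\bplay (?:some )?(.+? music)\b", re.IGNORECASE)

def extract_entities(text):
    """Return a dict of the details (time, location, music) found in the command."""
    entities = {}
    if m := TIME_PATTERN.search(text):
        entities["time"] = m.group(1)
    if m := PLACE_PATTERN.search(text):
        entities["location"] = m.group(1)
    if m := MUSIC_PATTERN.search(text):
        entities["music"] = m.group(1)
    return entities
```

Running it on the three commands above yields `{"time": "7:30 AM"}`, `{"music": "relaxing music"}`, and `{"location": "Paris"}`. The brittleness of such hand-written patterns (a lowercase "paris" would be missed) is one reason real systems learn this step from data.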

Let’s break down a few examples to see how this works in practice.

| Your Voice Command | Recognized Intent (The Goal) | Extracted Entities (The Details) |
| --- | --- | --- |
| “Show me the fastest route to the airport.” | GetDirections | fastest (route type), airport (destination) |
| “Text my mom that I’ll be late for dinner.” | SendMessage | mom (recipient), I’ll be late for dinner (message content) |
| “Add coffee to my shopping list.” | UpdateList | coffee (item), shopping (list name) |
| “How tall is Mount Everest?” | GetFact | Mount Everest (subject) |

I once tried to ask an assistant to “play some rock music” while I was driving near a large quarry. The assistant got confused by the background noise and my phrasing, and instead of opening my music app, it started navigating me to a local business called “Plymouth Rock & Gravel.” It was a funny reminder of just how complex language can be and how the AI has to sort through ambiguity to find the most probable intent.

From Understanding to Action: Executing the Command

The final step is to take the recognized intent and entities and turn them into a concrete action. At this stage, the smart assistant acts as a middleman, communicating your request to the appropriate application or service on your phone.

This is usually done through Application Programming Interfaces (APIs), which are like special channels that let different apps talk to each other.

- If your intent is SetTimer and the entity is 15 minutes, the assistant sends a command to your phone’s Clock app API to start a new timer.

- If your intent is GetDirections and the entity is the nearest gas station, the assistant tells your Maps app API to search for gas stations and calculate a route.

- If your intent is SendMessage, the assistant uses the Messages app API to compose and send the text.

If the request requires information from the internet, like a weather forecast or a fun fact, the assistant sends the query to a search engine or a specific data service (like a weather provider). Once it gets the answer back, it uses a Text-to-Speech (TTS) engine to convert the text-based information into the natural-sounding voice you hear as a response.
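The "middleman" role can be sketched as a lookup table from intents to handler functions, with an online lookup as the fallback and a final text-to-speech step. Every function here (`clock_start_timer`, `maps_route_to`, `messages_send`, `web_lookup`, `speak`) is a hypothetical placeholder; real assistants call platform-specific app interfaces, and a real TTS engine would produce audio rather than return a string.

```python
# Hypothetical stand-ins for app APIs; real assistants call platform-specific interfaces
def clock_start_timer(duration):
    return f"Timer started for {duration}."

def maps_route_to(destination):
    return f"Routing you to {destination}."

def messages_send(recipient, body):
    return f"Sent to {recipient}: {body}"

def web_lookup(query):
    # Placeholder for a request to a search engine or a weather service
    return f"Here is what I found for '{query}'."

def speak(text):
    # Placeholder for a Text-to-Speech engine; this sketch just returns the text
    return text

# Each intent maps to a function that receives the extracted entities
HANDLERS = {
    "SetTimer": lambda e: clock_start_timer(e["duration"]),
    "GetDirections": lambda e: maps_route_to(e["destination"]),
    "SendMessage": lambda e: messages_send(e["recipient"], e["message"]),
}

def execute(intent, entities, original_text=""):
    """Route the intent to the right app, or fall back to an online lookup."""
    handler = HANDLERS.get(intent)
    if handler is not None:
        return speak(handler(entities))
    # No app handles this intent: send the original question to an online service
    return speak(web_lookup(original_text))
```

For example, `execute("SetTimer", {"duration": "15 minutes"})` returns "Timer started for 15 minutes." A table like this keeps adding a new capability cheap: a new intent only needs one new entry.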

Why Your Assistant Keeps Getting Smarter

The entire process, from wake word to action, happens in just a couple of seconds. But the truly amazing part is that it’s constantly improving. Smart assistants are powered by machine learning, which means they learn from experience.

Every command you give (and millions of others from users around the world) can provide a new data point. This data, which providers say is anonymized to protect privacy, is used to periodically retrain the AI models. This feedback loop helps the system get better at:

- Recognizing speech more accurately, especially with unusual accents or slang.

- Understanding intent even when it’s phrased in a new or ambiguous way.

- Expanding its capabilities by learning new types of requests and intents.

This is why an assistant that struggled with a certain command a year ago might handle it perfectly today. It’s not just that the software was updated; the underlying AI model has learned and evolved.
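The feedback loop can be illustrated with a tiny word-count classifier that is retrained on new labeled examples. It is a toy stand-in for the large models real assistants use, and the `TinyIntentModel` class and its training phrases are hypothetical, but it shows how a slang phrase that was "Unknown" yesterday can be understood after one more round of training.

```python
from collections import Counter, defaultdict

class TinyIntentModel:
    """Toy word-count classifier; a stand-in for the large models real assistants retrain."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # intent -> how often each word appeared

    def train(self, examples):
        # examples: (text, intent) pairs, e.g. anonymized commands with corrected labels
        for text, intent in examples:
            self.word_counts[intent].update(text.lower().split())

    def predict(self, text):
        words = text.lower().split()
        # Score each intent by how often its training data contained these words
        scores = {intent: sum(counts[w] for w in words)
                  for intent, counts in self.word_counts.items()}
        if not scores or max(scores.values()) == 0:
            return "Unknown"
        return max(scores, key=scores.get)
```

Usage:

```python
model = TinyIntentModel()
model.train([("set an alarm for 6 am", "SetAlarm"), ("play some relaxing music", "PlayMusic")])
model.predict("bump tunes")  # "Unknown": the slang has never been seen
model.train([("bump tunes in the car", "PlayMusic")])  # new data arrives
model.predict("bump tunes")  # "PlayMusic"
```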

Frequently Asked Questions

Is my smart assistant always recording everything I say?

No. The device is only listening for the wake word using a low-power processor. Only after it hears that specific trigger phrase does it begin recording and processing your speech, and then only for the few seconds it needs to understand and respond to your command.

Why does my assistant sometimes activate by mistake?

This happens when a word or phrase sounds acoustically similar to the wake word. The low-power detector is designed for efficiency, not perfect accuracy, so it can sometimes be triggered by a “false positive.”

Can I use my voice assistant without an internet connection?

For some basic, on-device commands, yes. Things like setting a timer, opening an app, or playing music stored on your phone can often be done offline. However, for any request that requires up-to-date information (like weather, search queries, or directions), an internet connection is needed to contact the powerful cloud-based servers.
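The online/offline split described here amounts to a routing decision. The sketch below assumes a hypothetical set of intents that can run on the phone; which commands actually work offline differs from phone to phone and assistant to assistant.

```python
# Hypothetical split; which commands run on-device varies by phone and assistant
ON_DEVICE_INTENTS = {"SetTimer", "OpenApp", "PlayLocalMusic"}

def route(intent, online):
    """Decide where a recognized command can be handled."""
    if intent in ON_DEVICE_INTENTS:
        return "on-device"  # works with or without a connection
    if online:
        return "cloud"  # needs fresh data or heavier processing
    return "unavailable offline"
```

So `route("SetTimer", online=False)` still returns "on-device", while `route("GetWeather", online=False)` returns "unavailable offline".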

How does the AI handle so many different accents and languages?

The AI models are trained on massive and diverse datasets that include speech from millions of people from different regions, speaking various languages and dialects. This vast exposure allows the system to learn the different phonetic patterns and sentence structures associated with each accent, making it robust enough to understand a global user base.

Conclusion

The next time you ask your phone for a quick fact or to send a text, take a moment to appreciate the incredible journey your words just took. In a matter of seconds, they were heard, converted from sound to text, analyzed for meaning and intent, and translated into a digital action. What feels like a simple conversation is actually a powerful demonstration of artificial intelligence at its most practical.

It’s not some unknowable magic. It’s a logical, four-step process: wake word detection, speech-to-text conversion, natural language understanding, and command execution. Each step is a field of study in itself, but when combined, they create a seamless experience that has fundamentally changed how we interact with technology. As these systems continue to learn and evolve, this interaction will only become more natural, more helpful, and more integrated into our daily lives.