Have you ever looked at a photo you took on your phone and been genuinely amazed? The colors are vibrant, the details are sharp, and the blurry background in a portrait looks like it was shot with a professional camera. It’s easy to wonder how such a tiny lens can produce such incredible results. The answer isn’t just in the glass; it’s in the powerful artificial intelligence working silently behind the scenes. This technology is called computational photography, and it has completely changed what’s possible with the camera in our pocket.

My name is Zain Mhd, and I’ve spent more than five years working in AI, driven by a passion for understanding how this technology is reshaping our daily lives. In that time, I’ve seen AI move from abstract concepts to practical tools we use every day. My goal has always been to break down complex topics like computational photography into clear, simple explanations. This article isn’t just a technical breakdown; it’s a look into the magic that happens every time you tap the shutter button.

What Exactly Is Computational Photography?

At its core, computational photography uses algorithms and AI to overcome the physical limitations of a small smartphone camera. A traditional camera relies almost entirely on its hardware—the size of the sensor and the quality of the lens—to capture a single good image. Your phone takes a completely different approach.

Instead of trying to capture one perfect shot, your phone captures a burst of multiple images and a massive amount of data in an instant. Then, its powerful processor acts like a digital darkroom, using AI to analyze, combine, and enhance that data to construct a final image that is often better than any single frame it captured. Think of it as having a team of tiny, super-fast photo editors living inside your phone, making decisions in a split second to produce the best possible picture.

This fundamental difference is what allows your phone to compete with much larger, more expensive cameras. It’s a shift from relying on pure optics to leveraging intelligent software.
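To make the idea of combining a burst concrete, here is a minimal Python sketch of why several frames beat one. It assumes the frames are already perfectly aligned and that sensor noise is simple random (Gaussian) noise, which is a simplification of real sensor behavior. The function names are made up for illustration.

```python
import random


def capture_burst(true_scene, num_frames, noise_sigma, seed=0):
    """Simulate a burst: each frame is the true scene plus random sensor noise."""
    rng = random.Random(seed)
    return [
        [value + rng.gauss(0.0, noise_sigma) for value in true_scene]
        for _ in range(num_frames)
    ]


def merge_burst(frames):
    """Average already-aligned frames pixel by pixel."""
    return [sum(pixel_values) / len(pixel_values) for pixel_values in zip(*frames)]


def rms_error(image, reference):
    """Root-mean-square difference between an image and the true scene."""
    squared = [(a - b) ** 2 for a, b in zip(image, reference)]
    return (sum(squared) / len(squared)) ** 0.5


# A 1,000-pixel "scene": a dark half and a bright half (brightness from 0 to 1).
scene = [0.2] * 500 + [0.8] * 500
burst = capture_burst(scene, num_frames=9, noise_sigma=0.1)
merged = merge_burst(burst)

print(f"error in a single frame:   {rms_error(burst[0], scene):.3f}")
print(f"error after merging all 9: {rms_error(merged, scene):.3f}")
```

Averaging N frames shrinks random noise by roughly a factor of √N, so nine frames should cut the error to about a third of a single frame's. That square-root law is also why phones capture so many frames: doubling the burst only reduces noise by about 1.4×.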

Traditional vs. Computational Photography

Let’s break down the key differences. This shift in thinking is the primary reason for the huge leap in phone camera quality over the last several years.

| Feature | Traditional Photography | Computational Photography |
|---|---|---|
| Primary Focus | Optics (lens quality, sensor size) | Software algorithms and processing |
| Capture Process | A single frame of light is captured per shot | Multiple frames and data points are captured |
| Key Element | The quality of the physical hardware | The phone’s processing power and AI models |
| Final Result | A direct representation of the scene | An intelligently enhanced and optimized image |

Semantic Segmentation: How AI Sees Your Photo

One of the most powerful tools in computational photography is semantic segmentation. This is a fancy term for a simple but brilliant concept: the AI identifies and labels every single part of your photo. Before it makes any adjustments, the AI looks at the image and says, “This is a face, that’s the sky, this part is a tree, and that’s a dog.”

It essentially creates a pixel-by-pixel map of your photo, understanding the context of the scene. This is a huge leap from older photo filters that would apply the same effect across the entire image, often leading to unnatural results.

Why Does This Matter for Your Pictures?

Because the AI knows what it’s looking at, it can make much smarter decisions. I first noticed this on a hiking trip a few years back. When I took a picture of my friend with the mountains behind him, the phone’s camera software subtly brightened his face, sharpened the detail in the mountains, and made the blue sky a little more vibrant—all automatically. It didn’t just boost the saturation of the whole picture; it applied unique, targeted edits to each element. That’s semantic segmentation in action.

This technology allows your phone to perform incredibly specific enhancements:

- Selective Adjustments: It can make the sky bluer without turning a person’s skin blue. It can enhance the texture of a plate of food without altering the color of the table it’s on.

- Improved Focus: By identifying faces and eyes, the AI can help keep the most important part of the photo in sharp focus.

- Realistic Enhancements: This intelligence results in photos that look punchy and vibrant but still believable, as the adjustments are tailored to the content of the image.
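The selective adjustments above can be sketched in a few lines of Python. This is a toy illustration, not how any phone actually edits: the class IDs, the hand-made label map (standing in for a segmentation model's output), and the adjustment multipliers are all hypothetical.

```python
# Hypothetical class IDs; on a real phone the label map comes from a segmentation model.
SKY, PERSON, TREE = 0, 1, 2


def adjust_pixel(rgb, label):
    """Apply an edit chosen by the pixel's label. Channel values run from 0.0 to 1.0."""
    r, g, b = rgb
    if label == SKY:
        b = min(1.0, b * 1.15)  # deepen the blue of the sky only
    elif label == PERSON:
        # brighten skin evenly, leaving its color balance alone
        r, g, b = min(1.0, r * 1.1), min(1.0, g * 1.1), min(1.0, b * 1.1)
    elif label == TREE:
        g = min(1.0, g * 1.1)  # make foliage a little greener
    return (r, g, b)


def selective_adjust(image, labels):
    """Edit every pixel according to the label at the same position in the label map."""
    return [
        [adjust_pixel(pixel, label) for pixel, label in zip(image_row, label_row)]
        for image_row, label_row in zip(image, labels)
    ]


# A tiny 2x2 photo and a hand-made label map standing in for a model's output.
photo = [
    [(0.40, 0.60, 0.80), (0.45, 0.65, 0.85)],  # sky, sky
    [(0.70, 0.50, 0.40), (0.20, 0.50, 0.20)],  # face, tree
]
label_map = [
    [SKY, SKY],
    [PERSON, TREE],
]
edited = selective_adjust(photo, label_map)
print(edited[1][0])  # the face pixel: brighter, but its blue got no sky boost
```

A global filter would apply the sky's blue boost to every pixel, including the face. Here, the label map confines each edit to its own region.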

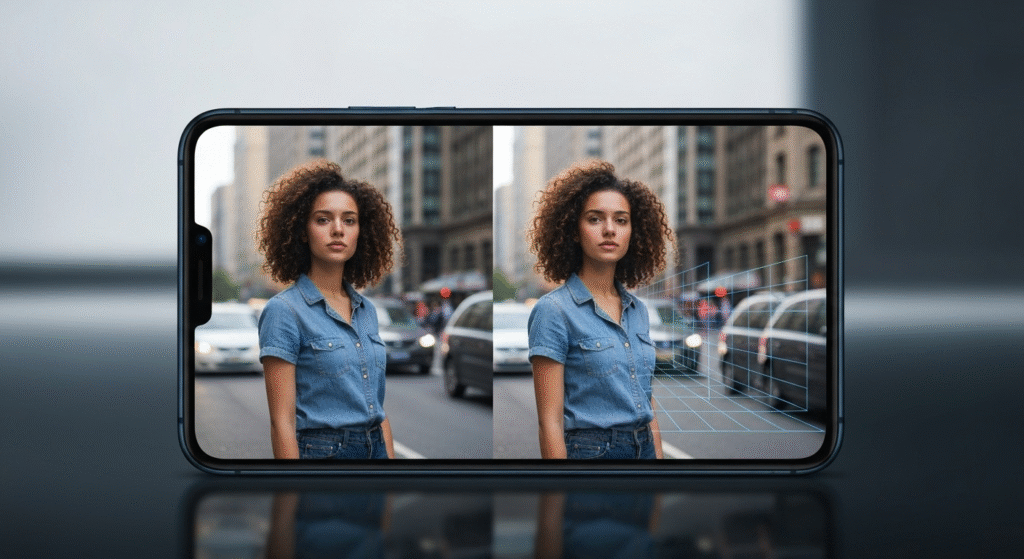

Portrait Mode: Creating Depth with an AI Brain

Portrait mode, with its beautifully blurred background (an effect known as bokeh), is one of the most popular features of modern phone cameras. On a DSLR, this effect comes naturally from a large sensor paired with a wide lens aperture, which together produce a shallow depth of field. Phone lenses and sensors are so small that they can produce very little of this blur optically. So, phones fake it with AI.

The magic behind this is a process called depth mapping. When you switch to portrait mode, the phone builds a depth map: an estimate of how far each part of the scene is from the camera. To do this, it uses data from the phone’s multiple lenses or sophisticated single-lens algorithms to figure out what’s close and what’s far away.
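For phones with two or more rear lenses, the core geometry is ordinary stereo vision, the same trick your two eyes use: a nearby object appears to shift more between the two lenses' views than a distant one does. Below is a minimal sketch of that relation, assuming an idealized pair of parallel pinhole cameras. The focal length and lens spacing are made-up illustrative values, and the hard part (finding which pixels match between the two views) is not shown.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Idealized stereo relation: depth = focal length x baseline / disparity.

    disparity_px: how far (in pixels) a point shifts between the two lenses' images
    focal_length_px: focal length expressed in pixels
    baseline_m: distance between the two lenses, in meters
    """
    if disparity_px <= 0:
        return float("inf")  # no measurable shift: treat the point as very far away
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only, not the specs of any real phone.
FOCAL_LENGTH_PX = 2800
BASELINE_M = 0.012  # lenses 12 mm apart

for disparity in (40, 10, 4):
    depth = depth_from_disparity(disparity, FOCAL_LENGTH_PX, BASELINE_M)
    print(f"shift of {disparity:>2} px -> about {depth:.2f} m away")
```

Because depth is inversely proportional to the shift, small matching errors matter little for close subjects but a lot for distant backgrounds.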

Once it has this depth map, the process is simple. The AI keeps the subject in the foreground sharp while applying a progressive blur to everything in the background. The result is a photo that convincingly mimics the look of a professional camera.
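The "keep the subject sharp, blur the background progressively" step can be sketched as a blur whose strength grows with each pixel's distance from the in-focus depth. This is a minimal illustration that assumes a depth map is already available; the simple box blur and the `blur_per_meter` and `max_radius` parameters are hypothetical simplifications, not how any particular phone renders bokeh.

```python
def box_average(image, row, col, radius):
    """Mean brightness of the square neighborhood around (row, col), clipped at the borders."""
    height, width = len(image), len(image[0])
    values = [
        image[r][c]
        for r in range(max(0, row - radius), min(height, row + radius + 1))
        for c in range(max(0, col - radius), min(width, col + radius + 1))
    ]
    return sum(values) / len(values)


def synthetic_bokeh(image, depth_map, focus_depth_m, blur_per_meter=2.0, max_radius=4):
    """Blur each pixel more the farther its depth is from the in-focus distance."""
    result = []
    for row in range(len(image)):
        out_row = []
        for col in range(len(image[0])):
            distance_from_focus = abs(depth_map[row][col] - focus_depth_m)
            radius = min(max_radius, round(blur_per_meter * distance_from_focus))
            out_row.append(box_average(image, row, col, radius))
        result.append(out_row)
    return result


# A 6x6 checkerboard photo: the left half is the subject (1 m away),
# the right half is background (5 m away).
photo = [[float((r + c) % 2) for c in range(6)] for r in range(6)]
depth = [[1.0 if c < 3 else 5.0 for c in range(6)] for r in range(6)]

portrait = synthetic_bokeh(photo, depth, focus_depth_m=1.0)
for row in portrait:
    print(" ".join(f"{v:.2f}" for v in row))
```

In this naive version, background pixels near the subject also average in some subject pixels. Avoiding that kind of smearing along the subject's outline is part of what makes fine edges like hair and fur difficult.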

My Experience with Portrait Mode’s Evolution

I remember the early days of portrait mode. It was a neat trick, but it often got things wrong, blurring the edges of a person’s hair or the arms of their glasses. Over the past five years, however, I’ve seen the AI get dramatically better. I recently took a photo of my brother in a busy park, and the separation between him and the complex background of trees and people was almost flawless. The AI is now much better at understanding fine details, making the effect incredibly realistic.

Pros and Cons of AI-Powered Portrait Mode

| Pros of AI Portrait Mode | Cons of AI Portrait Mode |
|---|---|
| Simulates the effect of expensive, professional lenses. | Can still make mistakes on complex edges like frizzy hair or fur. |
| The effect is created instantly on a device that fits in your pocket. | The quality of the blur can sometimes look a bit artificial or too uniform. |
| Many phones allow you to adjust the blur intensity after taking the photo. | It can struggle to work effectively in very dark or low-contrast scenes. |
| It makes portrait photography accessible to everyone. | The final result is an AI’s interpretation, not a true optical effect. |

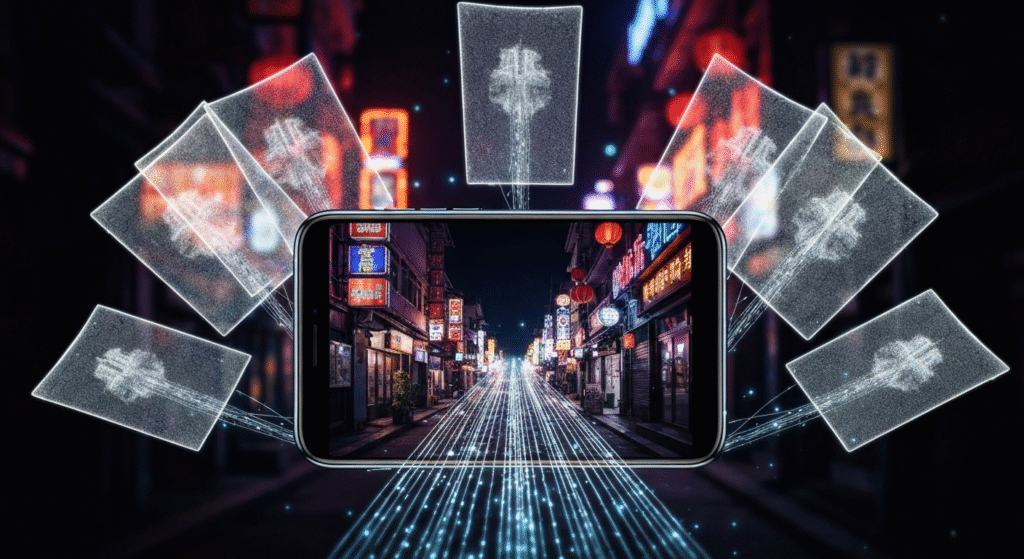

Night Mode: Seeing in the Dark Through Image Stacking

Taking photos in low light is one of the biggest challenges in photography. With a traditional camera, you have two bad options: use a slow shutter speed and risk a blurry photo from camera shake, or increase the sensor’s sensitivity (ISO) and end up with a grainy, noisy image.

Computational photography tackles this problem with two techniques working together: image stacking and noise reduction. When you press the shutter in Night Mode, your phone doesn’t just take one picture. It captures a rapid burst of many photos over a few seconds, often at different exposure levels.

Here is what the AI does next:

- Alignment: The AI analyzes all the images in the burst and aligns them, correcting for the slight hand shake that occurred while you were holding the phone.

- Stacking: It then intelligently merges the best parts of each photo. It takes the sharpest details from the underexposed (darker) shots and the brightness from the overexposed (brighter) shots.

- Noise Reduction: Finally, a separate AI algorithm specifically designed to find and remove digital noise (the ugly grain) cleans up the image.

This entire process results in a single photo that is bright, sharp, and remarkably clean—something that would be impossible to capture in a single frame with a tiny phone sensor. I was at a dimly lit evening market a while back and decided to test it. I held my phone steady for about three seconds, and the resulting image was stunning. It was bright enough to see all the details on the food stalls, but it still retained the dark, atmospheric feel of the night.
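The alignment and stacking steps described above can be sketched in a few dozen lines of Python. This is a simplified illustration, not any phone maker's pipeline: it assumes every frame has the same exposure, that hand shake only slides the whole frame by a few whole pixels, and that a brute-force search plus a plain average is good enough. Real night modes also cope with rotation, sub-pixel motion, and subjects that move between frames, and they add dedicated denoising on top. All function names and numbers are hypothetical.

```python
import random


def shift_image(image, dy, dx):
    """Slide image content down by dy and right by dx pixels, repeating edge pixels."""
    h, w = len(image), len(image[0])
    return [
        [image[min(h - 1, max(0, r - dy))][min(w - 1, max(0, c - dx))] for c in range(w)]
        for r in range(h)
    ]


def mean_abs_diff(a, b):
    """Average per-pixel difference between two equally sized images."""
    total = sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))
    return total / (len(a) * len(a[0]))


def align_to(reference, frame, max_shift=3):
    """Alignment: try every small whole-pixel shift and keep the best-matching one."""
    shifts = [
        (dy, dx)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    best = min(shifts, key=lambda s: mean_abs_diff(reference, shift_image(frame, *s)))
    return shift_image(frame, *best), best


def stack_frames(frames):
    """Stacking: align every frame to the first, then average pixel by pixel."""
    reference = frames[0]
    aligned = [reference] + [align_to(reference, f)[0] for f in frames[1:]]
    h, w = len(reference), len(reference[0])
    return [
        [sum(f[r][c] for f in aligned) / len(aligned) for c in range(w)]
        for r in range(h)
    ]


# Simulated night shot: a textured 16x16 scene and five noisy frames,
# each displaced by a different (made-up) amount of hand shake.
rng = random.Random(42)
scene = [[rng.random() for _ in range(16)] for _ in range(16)]
hand_shake = [(0, 0), (1, -2), (-1, 1), (2, 0), (0, 1)]
frames = [
    [[v + rng.gauss(0.0, 0.1) for v in row] for row in shift_image(scene, dy, dx)]
    for dy, dx in hand_shake
]

night_shot = stack_frames(frames)
print("correction found for frame 2:", align_to(frames[0], frames[1])[1])
```

The search should find, for each frame, the shift that undoes its simulated hand shake. Only after that does averaging help: stacking misaligned frames would blur detail instead of removing noise.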

Frequently Asked Questions

Does computational photography mean my phone is better than a DSLR?

Not necessarily. DSLRs and mirrorless cameras still have superior optical quality, larger sensors, and offer more manual control, which professionals prefer. However, for everyday situations, computational photography allows phones to produce images that are often more immediately pleasing and shareable with zero effort.

Can I turn these AI features off?

Some features, like Portrait Mode or Night Mode, are modes you have to select actively. However, core processes like semantic segmentation, HDR blending, and noise reduction are deeply integrated into the default camera software and are almost always working in the background to improve your photos.

Is all this processing happening in the cloud?

No, and this is crucial for privacy and speed. All of these complex AI calculations happen directly on your phone’s processor. Modern smartphones have dedicated AI components (Apple calls its version the “Neural Engine,” while many other chips call theirs an NPU, or neural processing unit) designed specifically to handle these tasks efficiently without needing an internet connection.

Does using these features drain my phone’s battery?

Yes, it can. Computational photography is very processing-intensive. Using modes like Night Mode or Portrait Mode for extended periods can cause your phone to get warm and will use more battery than just taking standard photos.

Conclusion

The next time you take a photo with your phone, take a moment to appreciate what’s happening. It’s not just a click; it’s a complex and intelligent process. The AI in your pocket is constantly working, using techniques like semantic segmentation to understand the world, depth mapping to create artistic effects, and image stacking to see in the dark. Computational photography has leveled the playing field, empowering all of us to be better photographers. It proves that the future of photography is not just about bigger lenses and sensors but about smarter software.
