Have you ever been deep in a conversation with an AI chatbot, feeling like you’re finally making progress, only for it to completely forget a key instruction you gave it just ten minutes ago? It’s incredibly frustrating. It feels like the AI has a bad memory, derailing your entire workflow. I used to face this all the time. I’d be asking it to help me outline an article, and halfway through, it would forget the target audience we had already established. This constant need to repeat myself made me question if these tools were really as smart as everyone claimed.

This frustration eventually sparked a deep curiosity. For the past five years, my work has involved exploring and writing about AI, trying to bridge the gap between complex technology and practical, everyday use. As Zain Mhd, my goal has always been to dig into the “why” behind how these tools work, offering clear explanations grounded in research and cultural context. This passion for knowledge-sharing, rather than any formal credential, pushed me to investigate why these advanced chatbots seemed so forgetful. It wasn’t just an academic question; it was about making these tools genuinely useful. What I discovered completely changed how I interact with AI.

The Big Question: Why Do AI Chatbots Forget?

Initially, I thought the problem was with the AI’s intelligence. Maybe it just wasn’t capable of holding a long train of thought. But the real answer isn’t about intelligence; it’s about architecture. AI chatbots don’t “remember” things the way humans do. They don’t have long-term memories or a persistent understanding of your past conversations unless the product adds a separate memory feature on top.

Instead, they operate within something called a “context window.” This was the key piece of the puzzle I was missing. Understanding this single concept shifted my approach from one of frustration to one of strategy. It became clear that the problem wasn’t always the AI; it was how I was communicating with it.

My “Aha!” Moment: Discovering the Context Window

So, what exactly is a context window? The best way I can explain it is to think of it as the AI’s short-term memory. Imagine you’re talking to someone, but they can only remember the last few sentences you’ve said. Anything before that is gone. The AI context window works similarly. It’s a fixed amount of text that the AI can “see” at any given moment when generating a response. This text includes your prompts and its own previous answers.

When your conversation gets too long, the oldest messages typically start to fall out of this window. The AI literally cannot see them anymore, so it “forgets” them. This memory is measured in “tokens,” which are pieces of words. For example, the word “chatbot” might be one token, but “forgetting” could be split into “forget” and “ting,” making it two tokens; the exact split depends on the tokenizer each model uses. Every AI model has a specific context window size, ranging from a few thousand tokens in older models to hundreds of thousands or more in recent ones. As explained by resources like Google’s AI blog, this token limit is a fundamental constraint of current language models.
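To make the "falling out of the window" idea concrete, here is a minimal Python sketch. It rests on two assumptions of mine: that a token is roughly four characters (a crude rule of thumb, not how any real tokenizer works), and that the chatbot simply drops the oldest messages once the budget is full. Real products may handle overflow differently. The names `estimate_tokens` and `visible_messages` are made up for illustration.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def visible_messages(messages: list[str], budget: int) -> list[str]:
    """Return the most recent messages that fit inside `budget` tokens."""
    kept = []
    used = 0
    # Walk backwards from the newest message; stop once the budget is spent.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


chat = [
    "Whenever you mention a color, also mention a fruit. My secret word is zeppelin.",
    "lorem ipsum " * 60,  # long filler text that crowds the window
    "What was my secret word?",
]
window = visible_messages(chat, budget=190)
print(len(window), "of", len(chat), "messages are still visible")
```

With this budget, the filler and the final question fit, but the first message with the rule and the secret word does not, so the model in this toy setup would never see it.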

This realization was my “aha!” moment. The AI wasn’t being difficult; it was simply working within its operational limits. I just needed to learn what those limits were and how to work with them.

Putting It to the Test: My Experiments with Chatbot Memory

To really grasp this, I decided to run a few simple experiments to see the context window in action. I wanted to find the breaking point and understand its behavior firsthand.

The Simple Memory Test

My first test was basic. I started a new chat and gave the AI a very specific, unusual instruction: “From now on, whenever you mention a color, you must also mention a fruit. For example, instead of ‘the sky is blue,’ you should say ‘the sky is blue like a blueberry.’ Please remember this rule for our entire conversation. My secret word is ‘zeppelin’.”

For the first 10-15 exchanges, it worked perfectly. Then, I started feeding it long paragraphs of text to discuss, intentionally trying to fill up the context window. After a while, I asked a simple question: “What color is a stop sign?” The AI replied, “A stop sign is red.” It had forgotten the color-fruit rule. When I asked, “What was my secret word?” it replied, “I’m sorry, you haven’t told me a secret word.” The word “zeppelin” had fallen out of its memory.

The Long Conversation Test

For this experiment, I tasked an AI with helping me plan a fictional event. I laid out all the details at the beginning:

- Event: A sci-fi book club meeting.

- Theme: “Robots and Humanity.”

- Guest Speaker: Dr. Aris Thorne.

- Budget: $500.

We discussed decorations, food, and discussion topics. The conversation went on for over 30 messages. Then I asked, “Okay, based on everything, please draft a final invitation. Remember to include the guest speaker’s name.”

The AI drafted a great invitation but mentioned the theme was “Exploring Outer Space” and the guest speaker was “Dr. Evelyn Reed.” The original, critical details from the start of our chat were now outside its context window, and it confidently presented incorrect information.

The Complex Instruction Test

My final experiment involved a multi-step task. I gave the AI a block of text and a single prompt with three commands:

- Summarize this text in three sentences.

- Extract all the names of people mentioned.

- Rewrite the summary in a casual, friendly tone.

The AI successfully summarized the text and extracted the names. However, the rewritten summary was in the same formal tone as the first one. It executed the first two commands but seemed to forget the third part of the instruction by the time it got there. The complexity of the prompt stretched its focus, and the last instruction was lost.

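These tests convinced me that the “forgetfulness” wasn’t random. In the first two, it tracked the conversation growing past what the AI could keep in view. The third showed a related problem: even a single prompt can carry more instructions than the AI reliably follows.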

From Frustration to Fluency: 5 Techniques I Now Use

Understanding the problem is one thing; solving it is another. Based on my experiments, I developed a set of techniques that have dramatically improved the quality and reliability of my AI conversations.

Technique 1: The “Primer” Prompt

I now start almost every complex conversation with a “primer” prompt. This is a short paragraph that sets the stage and contains all the critical information the AI must remember.

- Example: “I need your help writing a blog post. Here are the key rules for our entire conversation: The topic is ‘The Benefits of Urban Gardening.’ The target audience is beginner gardeners living in apartments. The tone should be encouraging and simple. The article must be around 1500 words. Please confirm you understand these core instructions.”

By putting the most important rules in the very first prompt, I establish a strong foundation for the rest of the conversation.
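If you reuse primers often, a small template helps keep them consistent. The sketch below is only one way to do it; `build_primer` is a hypothetical helper of my own, and the wording it produces simply mirrors the example primer.

```python
def build_primer(task: str, rules: dict[str, str]) -> str:
    """Assemble a primer prompt: the task, the rules, and a confirmation request."""
    lines = [task, "Here are the key rules for our entire conversation:"]
    lines += [f"- {name}: {value}" for name, value in rules.items()]
    lines.append("Please confirm you understand these core instructions.")
    return "\n".join(lines)


primer = build_primer(
    "I need your help writing a blog post.",
    {
        "Topic": "The Benefits of Urban Gardening",
        "Target audience": "beginner gardeners living in apartments",
        "Tone": "encouraging and simple",
        "Length": "around 1500 words",
    },
)
print(primer)
```

Keeping the rules in a dictionary means the same details can be reused later in the conversation without retyping them.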

Technique 2: Strategic Summaries

In long conversations, I now pause every 10-15 messages and ask the AI to summarize our progress and key decisions.

- Example: “Let’s quickly recap. We’ve decided on the main sections of the blog post: 1) Choosing the right plants, 2) The best containers, and 3) Watering schedules. Our target audience is still apartment dwellers. Is that correct?”

This does two things: it confirms the AI is still on track, and it re-inserts the most important information back into the context window, reinforcing it.
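For anyone scripting their own chat loop, the recap habit can be sketched in a few lines of Python. The interval of 12 messages is my own pick from the 10-15 range, and both `recap_due` and `build_recap` are hypothetical names, not part of any chatbot's API.

```python
def recap_due(message_count: int, every: int = 12) -> bool:
    """True when a recap is due; 12 is an arbitrary pick from the 10-15 range."""
    return message_count > 0 and message_count % every == 0


def build_recap(decisions: list[str], reminders: list[str]) -> str:
    """Write a recap prompt that restates decisions and standing details."""
    numbered = ", ".join(f"{i}) {d}" for i, d in enumerate(decisions, start=1))
    parts = ["Let's quickly recap.", f"We've decided on: {numbered}."]
    parts += [f"{reminder}." for reminder in reminders]
    parts.append("Is that correct?")
    return " ".join(parts)


recap = build_recap(
    ["Choosing the right plants", "The best containers", "Watering schedules"],
    ["Our target audience is still apartment dwellers"],
)
for count in range(1, 25):
    if recap_due(count):
        print(f"After message {count}: {recap}")
```

The point of the recap is less the confirmation itself and more that the key details now appear again near the end of the conversation.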

Technique 3: One Task, One Prompt

My complex instruction test taught me a valuable lesson. Instead of packing multiple commands into one prompt, I now break them down into smaller, sequential steps.

| Before (Less Effective) | After (More Effective) |
| --- | --- |
| “Summarize this, extract the names, and rewrite it in a friendly tone.” | Prompt 1: “Summarize this.” Prompt 2: “Now, extract all the names from the original text.” Prompt 3: “Great. Please rewrite that summary in a friendly tone.” |

This approach ensures the AI gives its full attention to each step, leading to much more accurate results.
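In code, the same idea looks like a loop that sends one step at a time. This is a sketch under assumptions: `ask` stands for whatever function sends a prompt to your chatbot and returns its reply, and `fake_ask` is a stand-in I made up so the example runs without any AI service.

```python
from typing import Callable


def run_steps(ask: Callable[[str], str], steps: list[str]) -> list[str]:
    """Send each step as its own prompt and collect the replies in order."""
    replies = []
    for step in steps:
        replies.append(ask(step))
    return replies


def fake_ask(prompt: str) -> str:
    """Hypothetical stand-in for a real chatbot call: it only echoes the prompt."""
    return f"[reply to: {prompt}]"


steps = [
    "Summarize this text in three sentences.",
    "Now, extract all the names from the original text.",
    "Great. Please rewrite that summary in a friendly tone.",
]
replies = run_steps(fake_ask, steps)
for step, reply in zip(steps, replies):
    print(step, "->", reply)
```

Because each step gets its own reply, it is also easy to spot which step went wrong and retry only that one.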

Technique 4: Re-stating Critical Information

If a specific detail is absolutely vital, I don’t rely on the AI to remember it from 20 messages ago. I include it directly in the prompt where it’s needed most.

- Example: Instead of asking, “Now write the conclusion,” I say, “Now write the conclusion for our blog post on urban gardening for beginners. Remember to keep the tone encouraging.”

This might feel repetitive, but it’s a dependable way to keep crucial context in front of the AI at the moment it matters.
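A tiny helper can do the re-stating for you. This is a rough sketch; `with_reminders` is a name I invented, and the "Keep in mind" wording is just one possible phrasing.

```python
def with_reminders(request: str, facts: dict[str, str]) -> str:
    """Append the critical facts to a request so they travel with the prompt."""
    if not facts:
        return request
    reminder = "; ".join(f"{name}: {value}" for name, value in facts.items())
    return f"{request} Keep in mind: {reminder}."


prompt = with_reminders(
    "Now write the conclusion.",
    {"topic": "urban gardening for beginners", "tone": "encouraging"},
)
print(prompt)
```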

Technique 5: Editing Your Previous Prompts

Some platforms allow you to edit your previous prompts. This is a powerful feature. If I realize I gave a vague instruction earlier that’s causing problems now, I can go back, edit the original prompt to be more specific, and regenerate the conversation from that point. This directly cleans up the context the AI is working with.
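Here is a small Python sketch of what editing a prompt does to the conversation. It assumes the platform discards everything after the edited prompt and regenerates from there, which may not match every product. The message format (dictionaries with `role` and `content`) and the name `edit_prompt` are illustrative choices of mine.

```python
def edit_prompt(history: list[dict[str, str]], index: int, new_text: str) -> list[dict[str, str]]:
    """Replace the user prompt at `index` and drop every later message."""
    if history[index]["role"] != "user":
        raise ValueError("only user prompts can be edited")
    edited = {"role": "user", "content": new_text}
    # Build a new list so the original history is left untouched.
    return history[:index] + [edited]


history = [
    {"role": "user", "content": "Help me plan an event."},
    {"role": "assistant", "content": "Sure. What kind of event?"},
    {"role": "user", "content": "Something fun."},
    {"role": "assistant", "content": "How about a party?"},
]
cleaned = edit_prompt(
    history, 2, "A sci-fi book club meeting on the theme 'Robots and Humanity'."
)
print(len(cleaned), "messages remain after the edit")
```

The vague prompt and the reply it caused are both gone, so they no longer take up room in the context or steer later answers.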

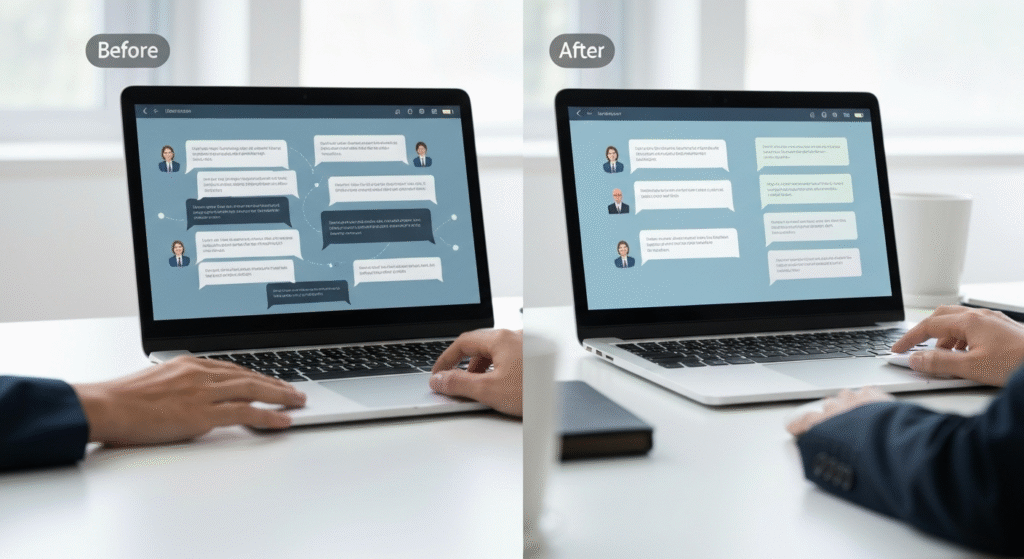

The Difference in Action: A Before-and-After Example

Let’s revisit my failed event planning experiment. Here’s how it would look using my new techniques.

Conversation 1: The “Before” Method (Failed)

Me (Prompt 1): Let’s plan a sci-fi book club meeting about “Robots and Humanity” with guest speaker Dr. Aris Thorne on a $500 budget.

(…30 messages of planning details…)

Me (Prompt 31): Okay, draft the invitation.

AI (Response 31): You’re invited to our book club meeting on “Exploring Outer Space” with special guest Dr. Evelyn Reed!

Conversation 2: The “After” Method (Success)

Me (Prompt 1 – Primer): We are planning a sci-fi book club meeting. Core Details: Theme is “Robots and Humanity.” Guest Speaker is Dr. Aris Thorne. Budget is $500. Please keep these in mind for all tasks.

AI (Response 1): Understood. Theme: “Robots and Humanity,” Speaker: Dr. Aris Thorne, Budget: $500. How can I help first?

(…15 messages of planning details…)

Me (Prompt 16 – Summary): Let’s summarize. We’ve chosen a venue and planned the menu, staying within the $500 budget. The theme remains “Robots and Humanity” with Dr. Thorne. Correct?

AI (Response 16): That is correct.

(…15 more messages of planning…)

Me (Prompt 31 – Re-stating Info): Perfect. Now please draft the final invitation. Make sure to prominently feature the guest speaker, Dr. Aris Thorne, and the theme, “Robots and Humanity.”

AI (Response 31): Get ready for an exciting discussion! You’re invited to our book club meeting on “Robots and Humanity” with our esteemed guest speaker, Dr. Aris Thorne!

The difference is night and day. The second conversation is controlled, accurate, and produces the desired outcome because I actively managed the context window.

What This Taught Me About Working with AI

Learning about context windows fundamentally changed my relationship with AI. I no longer see chatbots as all-knowing oracles but as incredibly powerful tools that operate on a specific set of rules. My role as the user is not just to ask questions but to guide the conversation and manage the information flow.

This shift in perspective is empowering. It means that with the right techniques, we can overcome one of the biggest limitations of current AI and turn a frustrating experience into a productive partnership. It’s not about waiting for developers to build a perfect AI; it’s about becoming a more skilled user of the tools we have today.

Frequently Asked Questions (FAQs)

Do all AI chatbots have the same context window size?

No, the size varies significantly between different models and even different versions of the same model. Generally, newer and more advanced models have larger context windows, allowing for longer and more complex conversations.

Is a bigger context window always better?

Mostly, yes. A larger context window lets the AI keep more of the conversation in view, reducing the chances of it “forgetting” important details. However, processing a very large context takes more time and computational resources, so responses can be slower or more expensive.

Can I find out the exact context window of a chatbot I’m using?

Usually not directly. Most commercial chatbots don’t explicitly state their context window size in tokens. However, you can often find this information for the underlying models (like GPT-4 or Claude 3) in technical documents or announcements from the AI labs that create them.

Will AI context windows get bigger in the future?

Almost certainly. Expanding the context window is a major area of research and development in the AI industry. We can expect future models to handle much longer conversations and documents with greater accuracy.

Conclusion

That feeling of an AI forgetting your instructions isn’t just in your head—it’s a real technical limitation. But it’s not a dead end. By understanding the concept of the context window and actively managing it with techniques like primer prompts, strategic summaries, and clear, single-task instructions, you can take control of your AI conversations. My journey from frustration to fluency showed me that the most powerful AI interactions happen when human guidance works in sync with the machine’s capabilities. Give these methods a try; you might be surprised at how much smarter your chatbot suddenly seems.