My first real interaction with an AI writing tool felt like trying to give directions to someone who didn’t speak my language. I’d type in a simple command like, “Write an article about remote work,” and get back a wall of text so generic it was practically useless. It was bland, robotic, and completely missed the point. I spent more time rewriting the AI’s mess than it would have taken to write the piece from scratch. It was frustrating, and I almost dismissed the technology entirely as just another overhyped gimmick.

For years, I’ve been fascinated by the practical applications of artificial intelligence. My journey into this world wasn’t driven by a formal degree but by a deep curiosity about how these tools can change the way we work and create. After spending the last five years deeply embedded in this space, I’ve learned that the secret to unlocking an AI’s potential isn’t about knowing code; it’s about knowing how to ask the right questions. My goal is to share the practical lessons I’ve learned through countless hours of trial and error, helping you move from frustration to fluency without needing to be a tech wizard.

The Early Days: My First Clumsy Prompts (And Why They Failed)

When I first started, I treated the AI like a glorified search engine. I gave it short, vague commands and expected brilliant results. This approach, I quickly learned, was the fastest path to disappointment. My early failures almost always fell into two categories.

The “One-Liner” Mistake

My initial prompts were incredibly brief. I thought simplicity was key, but I was just being lazy. I was providing the AI with a topic but giving it zero direction.

My Prompt:

“Write about the benefits of reading.”

The AI’s Output:

Reading is a beneficial activity. It can improve your vocabulary. Reading also helps you learn new things and can reduce stress. People who read more are often more knowledgeable. It is a good hobby to have for both children and adults.

The output wasn’t wrong, but it was completely soulless. It read like a middle school book report. The reason it failed is that I gave the AI no guardrails. It didn’t know who the audience was, what tone to use, what format to follow, or what specific goal I had in mind. It simply produced the most average, statistically likely response based on the vast amount of text it was trained on.

The Vague Instruction Trap

My next evolution was trying to refine the output, but my instructions were still far too subjective. I knew what I wanted, but the AI couldn’t read my mind.

My Prompt:

“Take this sentence and make it better: ‘The software helps users to do their tasks more efficiently.'”

The AI’s Output:

“This software solution empowers users to complete their tasks with enhanced efficiency.”

While technically different, the new sentence wasn’t much of an improvement. It just swapped out simple words for more corporate-sounding ones. The term “better” is meaningless without context. Did I want it to be more professional? More casual? More descriptive? Shorter? The AI had to guess, and its guess was as generic as my prompt.

| Common Mistake | Why It Fails | How to Fix It |
| --- | --- | --- |
| The One-Liner | Provides no context, audience, or goal. | Specify the format, tone, and target reader. |
| Vague Adjectives | Words like “good” or “better” are subjective. | Define your terms. Instead of “make it better,” try “make it more concise and use a confident tone.” |
| No Persona | The AI defaults to a neutral, generic voice. | Assign the AI a role or persona to guide its style. |

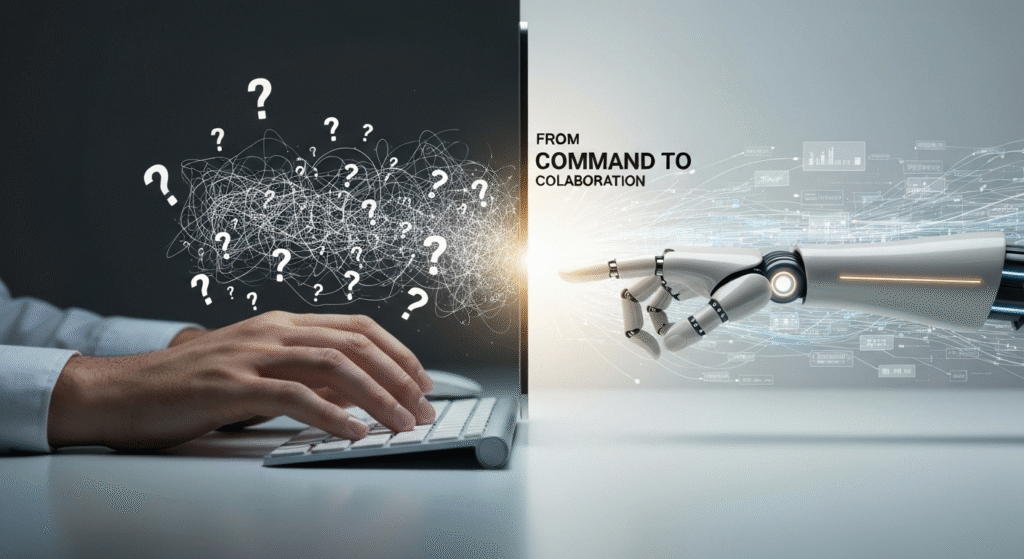

The Turning Point: AI as a Collaborator, Not a Vending Machine

The real breakthrough came when I stopped treating the AI like a vending machine where I insert a prompt and get a finished product. I started treating it like a very knowledgeable, very literal-minded junior assistant. An assistant who is eager to help but needs extremely clear instructions to do a good job.

The core principle I learned is this: The quality of the AI’s output is a direct reflection of the quality of your input.

This mental shift changed everything. Instead of just giving a command, I began providing context, setting expectations, and guiding the AI toward my desired outcome. It became a conversation, a collaboration.

Core Techniques That Transformed My Results

Through hundreds of experiments, I landed on a few core techniques that consistently deliver better results. These methods are about adding layers of detail to your prompts to remove guesswork for the AI.

The Power of Persona and Role-Playing

This is the single most effective technique I found. By telling the AI who it is, you instantly shape its tone, style, and vocabulary. Instead of its default neutral voice, it adopts the characteristics of the persona you assign.

Let’s take a product description as an example.

Before (No Persona):

“Write a product description for a new coffee mug.”

After (With Persona):

“Act as an expert marketing copywriter for a premium home goods brand called ‘Aura Home.’ Your target audience is young professionals aged 25-40 who value minimalist design and quiet morning rituals. Write a 100-word product description for our new handcrafted ceramic mug, ‘The Dawn.’ Focus on the feeling of warmth, the smooth texture, and how it elevates a simple cup of coffee into a mindful experience. Use a warm, inviting, and slightly sophisticated tone.”

The difference in the output is night and day. The “Before” prompt would likely produce a simple list of features (e.g., “This mug holds 12 oz. It is dishwasher safe.”). The “After” prompt provides a role, a target audience, a tone, and specific emotional points to hit. The AI is no longer guessing; it has a complete creative brief to work from.
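You don’t need code for any of this, but if you reuse the same kind of brief often, it can help to treat the prompt as a set of named parts. Here is a minimal sketch in Python; the function `build_persona_prompt` and its parameter names are my own hypothetical choices, not part of any AI tool, and the sentence order is just one reasonable layout.

```python
def build_persona_prompt(role, audience, task, focus_points, tone):
    """Assemble a persona-style prompt from its parts (hypothetical helper)."""
    focus = ", ".join(focus_points)
    return (
        f"Act as {role}. "
        f"Your target audience is {audience}. "
        f"{task} "
        f"Focus on {focus}. "
        f"Use a {tone} tone."
    )


prompt = build_persona_prompt(
    role="an expert marketing copywriter for a premium home goods brand called 'Aura Home'",
    audience="young professionals aged 25-40 who value minimalist design and quiet morning rituals",
    task="Write a 100-word product description for our new handcrafted ceramic mug, 'The Dawn.'",
    focus_points=[
        "the feeling of warmth",
        "the smooth texture",
        "how it elevates a simple cup of coffee into a mindful experience",
    ],
    tone="warm, inviting, and slightly sophisticated",
)
print(prompt)
```

Splitting the brief into role, audience, task, focus, and tone makes it obvious when one of them is missing, which is exactly the gap the “Before” prompt suffers from.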

Context is King: Providing the “Why” and “Who”

An AI doesn’t understand your underlying goals unless you state them explicitly. Providing context about the purpose of the task and the intended audience is crucial.

Before (No Context):

“Summarize this article: [article text]”

After (With Context):

“I am a project manager preparing a weekly update for my executive team. My goal is to quickly inform them of our project’s progress and highlight any roadblocks. They are very busy and only have time for the high-level points. Summarize the following project report [report text] into three clear, concise bullet points. Each bullet point should start with the project area (e.g., Design, Engineering) and end with the current status.”

The “After” prompt tells the AI the who (executive team), the why (a weekly update), and the how (three bullet points with a specific format). This context ensures the summary is tailored to the specific need, rather than being a generic abstract of the text.
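If you send a similar update every week, the who, why, and how can live in a reusable template, with only the report text changing. The sketch below uses Python’s standard `string.Template`; the template wording and the placeholder names (`who_i_am`, `deliverable`, and so on) are assumptions I made up for illustration.

```python
from string import Template

# Hypothetical reusable template: the who, the why, and the how are explicit slots.
CONTEXT_PROMPT = Template(
    "I am a $who_i_am preparing $deliverable for $audience. "
    "My goal is to $goal. "
    "Summarize the following report into $bullet_count clear, concise bullet points.\n\n"
    "$report_text"
)

prompt = CONTEXT_PROMPT.substitute(
    who_i_am="project manager",
    deliverable="a weekly update",
    audience="my executive team",
    goal="quickly inform them of our project's progress and highlight any roadblocks",
    bullet_count="three",
    report_text="[report text]",  # placeholder, as in the prompt above
)
print(prompt)
```

A side benefit of `substitute` is that it raises a `KeyError` if any slot is left unfilled, so you can’t accidentally send a prompt with the context missing.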

Iteration and Refinement: The Art of the Follow-Up

Your first prompt is often just the opening line of a conversation. The real magic happens in the follow-up prompts. Don’t be afraid to ask the AI to tweak, expand, or completely rethink its initial response.

Here’s a real example of an iterative workflow:

- My First Prompt: “I need to write an email to my team about a new workflow for submitting project updates. Generate a draft.”

- AI Output: (A very long, formal email draft).

- My Follow-Up: “This is too formal. Make it more direct and friendly. Cut the length by about half and use bullet points for the new steps.”

- AI Output: (A much better, more scannable draft).

- My Final Tweak: “This is great. Can you just add a line at the end encouraging the team to ask questions in our weekly sync?”

By refining the output step-by-step, I guided the AI to the perfect final product. This process is faster than writing it myself from scratch and yields a much better result than a single, lazy prompt.
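One way to picture this back-and-forth is as a growing list of messages, where every follow-up is read in light of everything before it. The sketch below is only an illustration of that idea: the `add_turn` helper is hypothetical, the "user" and "assistant" labels are an assumed convention rather than any specific tool’s API, and the AI’s replies are placeholders, since the actual drafts aren’t reproduced here.

```python
def add_turn(history, role, content):
    """Append one message to the conversation history and return it."""
    history.append({"role": role, "content": content})
    return history


conversation = []
add_turn(conversation, "user",
         "I need to write an email to my team about a new workflow for "
         "submitting project updates. Generate a draft.")
add_turn(conversation, "assistant", "(a very long, formal email draft)")
add_turn(conversation, "user",
         "This is too formal. Make it more direct and friendly. Cut the length "
         "by about half and use bullet points for the new steps.")
add_turn(conversation, "assistant", "(a much better, more scannable draft)")
add_turn(conversation, "user",
         "This is great. Can you just add a line at the end encouraging the "
         "team to ask questions in our weekly sync?")

# Each follow-up only makes sense alongside the turns that came before it.
for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

Seen this way, a follow-up like “This is too formal” works because the earlier draft is still part of the conversation; the same sentence sent on its own would mean nothing.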

Advanced Prompting Patterns I Discovered

Once you master the basics, you can start using more structured prompting patterns to tackle complex tasks. These require a bit more setup but can dramatically improve the AI’s reasoning and accuracy.

The “Chain-of-Thought” Method

Sometimes, when you ask an AI a complex question, it rushes to an answer and makes a mistake. Chain-of-Thought (CoT) prompting is a way to slow it down and force it to “show its work.” You simply add the phrase “think step-by-step” to your prompt.

This encourages the model to break down a problem into smaller parts, work through the logic, and then arrive at a conclusion. As researchers from Google found, this simple tweak can significantly improve performance on tasks that require arithmetic, commonsense, and symbolic reasoning.

Before (No CoT):

“A farmer has 15 chickens and sells 3. Each remaining chicken lays 4 eggs a week. He packages the eggs in cartons that hold 6 eggs each. How many full cartons can he make in a week?”

An AI might rush and miscalculate.

After (With CoT):

“A farmer has 15 chickens and sells 3. Each remaining chicken lays 4 eggs a week. He packages the eggs in cartons that hold 6 eggs each. How many full cartons can he make in a week? Let’s think step-by-step.”

With this prompt, the AI will first outline its logic:

- Calculate remaining chickens: 15 – 3 = 12 chickens.

- Calculate total eggs per week: 12 chickens * 4 eggs/chicken = 48 eggs.

- Calculate the number of full cartons: 48 eggs / 6 eggs/carton = 8 cartons.

- Final Answer: The farmer can make 8 full cartons.

This method often leads to more accurate answers, and it also lets you see the AI’s reasoning process, making it easier to spot any errors.
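Because the reasoning is laid out step by step, each line can be checked independently. As a quick sanity check, here is the same arithmetic in a few lines of Python; the variable names are mine, and the numbers come straight from the farmer problem.

```python
chickens_start = 15
chickens_sold = 3
eggs_per_chicken_per_week = 4
eggs_per_carton = 6

# Step 1: chickens remaining after the sale.
remaining_chickens = chickens_start - chickens_sold

# Step 2: total eggs laid in a week.
total_eggs = remaining_chickens * eggs_per_chicken_per_week

# Step 3: full cartons, plus any eggs left over that don't fill a carton.
full_cartons, leftover_eggs = divmod(total_eggs, eggs_per_carton)

print(remaining_chickens, total_eggs, full_cartons, leftover_eggs)  # 12 48 8 0
```

Using `divmod` keeps the “full cartons” wording honest: if the eggs didn’t divide evenly, the remainder would show up as leftover eggs instead of being rounded into an extra carton.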

Zero-Shot vs. Few-Shot Prompting

These are two different approaches to teaching the AI what you want.

- Zero-Shot Prompting: This is what we do most of the time. You ask the AI to perform a task without giving it any prior examples.

- Few-Shot Prompting: This is where you provide the AI with 1-3 examples of the task before asking it to do your specific one. This is incredibly effective for tasks involving formatting, rephrasing, or classification.

Let’s say I want to turn bland product features into exciting customer benefits.

| Prompt Type | Example Prompt |
| --- | --- |
| Zero-Shot | “Turn this feature into a benefit: ‘The backpack is made of waterproof nylon.'” |
| Few-Shot | “I will give you a feature, and you will rewrite it as a customer benefit. Here are some examples: – Feature: ’10-hour battery life.’ -> Benefit: ‘Work an entire day without ever searching for a power outlet.’ – Feature: ‘Stainless steel construction.’ -> Benefit: ‘Built to last a lifetime, so you can buy it once and be done.’ Now, do this one: – Feature: ‘The backpack is made of waterproof nylon.’ ->” |

The Few-Shot prompt gives the AI a clear pattern to follow, resulting in a much stronger, more creative output that matches the style of the examples provided.
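If you keep a running list of good examples, assembling a few-shot prompt becomes mechanical. The sketch below is one way to do it in Python; `build_few_shot_prompt` is a hypothetical helper of my own, and the exact line layout is an assumption, not a required format.

```python
def build_few_shot_prompt(instruction, examples, new_feature):
    """Build a few-shot prompt from (feature, benefit) example pairs."""
    lines = [instruction, "Here are some examples:"]
    for feature, benefit in examples:
        lines.append(f"- Feature: '{feature}' -> Benefit: '{benefit}'")
    lines.append("Now, do this one:")
    # End on an open arrow so the pattern invites the AI to complete it.
    lines.append(f"- Feature: '{new_feature}' ->")
    return "\n".join(lines)


examples = [
    ("10-hour battery life.",
     "Work an entire day without ever searching for a power outlet."),
    ("Stainless steel construction.",
     "Built to last a lifetime, so you can buy it once and be done."),
]

prompt = build_few_shot_prompt(
    instruction="I will give you a feature, and you will rewrite it as a customer benefit.",
    examples=examples,
    new_feature="The backpack is made of waterproof nylon.",
)
print(prompt)
```

Keeping every example in exactly the same shape matters more than the helper itself: the consistency is what gives the AI a pattern to continue.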

Frequently Asked Questions (FAQs)

How specific is too specific for a prompt?

It’s very difficult to be too specific. In most cases, more detail and context lead to better results. The only time it becomes a problem is if your instructions are contradictory. It’s better to be overly detailed than too vague.

Does the same prompt work on all AI models?

Not always. Different AI models (like those from Google, Anthropic, or OpenAI) have different strengths, weaknesses, and training data. A prompt that works perfectly on one might need to be tweaked for another. Experimentation is key.

Can AI perfectly mimic my writing style?

It can get surprisingly close if you provide it with several examples of your writing (a “few-shot” approach for style). However, it will always lack your unique experiences and perspective. It’s best used to create a first draft in your style that you then refine with your own human touch.

Is prompt engineering a skill worth learning for the future?

Absolutely. Learning to communicate effectively with AI is becoming a fundamental form of digital literacy. It’s not about becoming a coder; it’s about becoming a better instructor, a better editor, and a better collaborator with these incredibly powerful tools.

Conclusion: From Frustration to Fluency

My journey from writing clumsy one-liners to crafting detailed, multi-layered prompts has been transformative. I no longer fight with the AI. Instead, I guide it. The process is faster, more creative, and produces results that are far more valuable. The key was a simple change in perspective: stop commanding and start collaborating.

Learning to write a good prompt isn’t about mastering a complex technical skill. It’s about learning to communicate with clarity, context, and intent. It’s a skill that takes practice, but the payoff is enormous. By giving the AI the detailed instructions it needs, you free up your own mental energy to focus on what humans do best: strategy, creativity, and adding that final, authentic touch.